Learn OpenGL

Introduction

Getting started

window

GLFW

a library, written in C, specifically targeted at OpenGL

gives us the bare necessities required for rendering goodies to the screen.

GLAD

an open source library

triangle

shaders

small programs on GPU for each step of pipeline

language: GLSL(OpenGL Shading Language)

pipeline

(primitive assembly stage)

first: vertex shader;

in:single vertex;

mainly used to transform 3D coordinates

out:

(optional) geometry shader

geometry shader

in: a collection of vertices

to generate shapes

out: rasterization stage

(rasterization stage)

clipping; discard all fragments outside your view

out: fragment shader

fragment shader

calculate final color of a pixel

like lights, shadows, color of the light…

alpha test and blending stage

vertex buffer objects (VBO)

store a large number of vertices in the GPU’s memory.

buffer type:

GL_ARRAY_BUFFER

Sending data to the graphics card from the CPU is relatively slow, so wherever we can we try to send as much data as possible at once.

create call: glGenBuffers

bind call: glBindBuffer

copy call: glBufferData

usage hints for glBufferData:

GL_STREAM_DRAW: the data is set only once and used by the GPU at most a few times.

GL_STATIC_DRAW: the data is set only once and used many times.

GL_DYNAMIC_DRAW: the data is changed a lot and used many times.

vertex shader

input;

layout (location = 0)

uniforms

global;

setting attributes that may change every frame

interchanging data between your application and your shaders

vertex input can take any form, so its layout has to be specified manually

tightly packed; offset/stride

glVertexAttribPointer: tells OpenGL how it should interpret the vertex data (per vertex attribute)

glEnableVertexAttribArray: enables the vertex attribute

vertex attributes are disabled by default.
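The stride and offset can be read straight off an interleaved vertex struct. The following is a minimal sketch, assuming a hypothetical `Vertex` layout (position, color, texture coordinate); `Vertex`, `AttribLayout` and the three helper functions are illustrative names, not part of OpenGL.

```cpp
#include <cstddef>

// Hypothetical interleaved vertex: position, color and texture coordinate.
struct Vertex {
    float position[3];
    float color[3];
    float uv[2];
};

// The three numbers glVertexAttribPointer needs for one attribute.
struct AttribLayout {
    int components;      // number of floats in the attribute (1 to 4)
    std::size_t stride;  // bytes from one vertex to the next
    std::size_t offset;  // byte offset of the attribute inside a vertex
};

inline AttribLayout positionLayout() { return {3, sizeof(Vertex), offsetof(Vertex, position)}; }
inline AttribLayout colorLayout()    { return {3, sizeof(Vertex), offsetof(Vertex, color)}; }
inline AttribLayout uvLayout()       { return {2, sizeof(Vertex), offsetof(Vertex, uv)}; }
```

With a live context, each layout would feed a call of the form glVertexAttribPointer(index, components, GL_FLOAT, GL_FALSE, stride, (void*)offset), followed by glEnableVertexAttribArray(index).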

predefined

gl_Position; output of the vertex shader

compiling shader

glCreateShader

glShaderSource

glCompileShader

vertex array object (VAO)

a VAO stores:

calls to glEnableVertexAttribArray or glDisableVertexAttribArray

vertex attribute configurations via glVertexAttribPointer

vertex buffer objects associated with vertex attributes by calls to glVertexAttribPointer

element buffer objects (EBO)

indexed drawing

stores indices that OpenGL uses to decide what vertices to draw.

textures

a 2D image (even 1D and 3D textures exist) used to add detail to an object

texture coordinate

uv: bottom-left (0,0) -> top-right (1,1)

sampling

Retrieving the texture color using texture coordinates

Texture Wrapping

what happens when coordinates fall outside of (0,1)

GL_REPEAT: The default behavior for textures. Repeats the texture image.

GL_MIRRORED_REPEAT: Same as GL_REPEAT but mirrors the image with each repeat.

GL_CLAMP_TO_EDGE: Clamps the coordinates between 0 and 1. The result is that higher coordinates become clamped to the edge, resulting in a stretched edge pattern.

GL_CLAMP_TO_BORDER: Coordinates outside the range are now given a user-specified border color.

glTexParameter

Texture Filtering

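GL_NEAREST: picks the texel whose center is closest to the texture coordinate; gives sharp, blocky edges

GL_LINEAR: interpolates between the neighboring texels; gives a smoother result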

Mipmaps

sqrt

high resolution for near objects

low resolution for far objects

glGenerateMipmap

filtering

loading and creating

texture(sampler, coord) in GLSL

Texture Units

glActiveTexture

glBindTexture

glTexImage2D

Transformations

vector

matrix

scaling and translation $$ \begin{bmatrix} S_1&0&0&T_x\\ 0&S_2&0&T_y\\ 0&0&S_3&T_z\\ 0&0&0&1 \end{bmatrix} * \begin{bmatrix} x\\ y\\ z\\ 1 \end{bmatrix} = \begin{bmatrix} S_1 x+T_x\\ S_2 y+T_y\\ S_3 z+T_z\\ 1 \end{bmatrix} $$
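The matrix product above can be checked numerically. This is a small sketch using hand-rolled `Vec4` and `Mat4` types (hypothetical helpers, not GLM) that builds the combined scale-and-translate matrix and applies it to a point.

```cpp
struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[4][4]; };  // row-major: m[row][column]

// Combined matrix: scale by (s1, s2, s3), then translate by (tx, ty, tz).
Mat4 scaleTranslate(float s1, float s2, float s3, float tx, float ty, float tz) {
    Mat4 r = {{{s1, 0.0f, 0.0f, tx},
               {0.0f, s2, 0.0f, ty},
               {0.0f, 0.0f, s3, tz},
               {0.0f, 0.0f, 0.0f, 1.0f}}};
    return r;
}

// Matrix times column vector.
Vec4 multiply(const Mat4& a, const Vec4& v) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            out[row] += a.m[row][col] * in[col];
    return {out[0], out[1], out[2], out[3]};
}
```

For example, scaling the point (1, 1, 1) by 2 and translating by (1, 2, 3) gives (3, 4, 5), matching S x + T per component.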

rotation

GLM

OpenGL Mathematics

glm::vec4

glm::mat4

glm::translate

glm::rotate

glm::scale

glm::radians

…

Coordinate systems

Local space (or Object space)

World space

View space (or Eye space)

camera

Clip space

projection matrix

glm::ortho

perspective projection

glm::perspective

Screen space

putting it all together

$V_{clip} = M_{projection}* M_{view} * M_{model}* V_{local}$

the result ->

gl_Position

z-buffer

depth-testing

decide when to draw over a pixel or not

glEnable(GL_DEPTH_TEST)

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT)

Camera

position

direction

right axis

up axis

look at $$ LookAt = \begin{bmatrix} R_x&R_y&R_z&0\\ U_x&U_y&U_z&0\\ D_x&D_y&D_z&0\\ 0&0&0&1 \end{bmatrix} * \begin{bmatrix} 1&0&0&-P_x\\ 0&1&0&-P_y\\ 0&0&1&-P_z\\ 0&0&0&1 \end{bmatrix} = \begin{bmatrix} R_x&R_y&R_z&-R_xP_x-R_yP_y-R_zP_z\\ U_x&U_y&U_z&-U_xP_x-U_yP_y-U_zP_z\\ D_x&D_y&D_z&-D_xP_x-D_yP_y-D_zP_z\\ 0&0&0&1 \end{bmatrix} $$
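The LookAt matrix can be assembled directly from the camera position, a target and a world up vector. This is a sketch with hand-rolled types (the `Vec3`/`Mat4` structs and helper names are assumptions, not GLM); it takes D as the normalized vector from the target to the camera, R as the normalized cross product of world up and D, and U as the cross product of D and R.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Mat4 { float m[4][4]; };  // row-major: m[row][column]

Vec3 subtract(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Builds the product of the rotation (R, U, D rows) and the translation by -P.
Mat4 lookAt(Vec3 position, Vec3 target, Vec3 worldUp) {
    const Vec3 d = normalize(subtract(position, target));  // direction axis
    const Vec3 r = normalize(cross(worldUp, d));           // right axis
    const Vec3 u = cross(d, r);                            // up axis
    Mat4 view = {{{r.x, r.y, r.z, -dot(r, position)},
                  {u.x, u.y, u.z, -dot(u, position)},
                  {d.x, d.y, d.z, -dot(d, position)},
                  {0.0f, 0.0f, 0.0f, 1.0f}}};
    return view;
}
```

The fourth column is the expanded form from the equation: each entry is the negated dot product of one axis with the position P.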

Euler angles

pitch; x axis

yaw; y axis

roll; z axis
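Yaw and pitch are enough to build a camera direction vector; roll is ignored in this sketch, as is common for a fly-style camera. The function name and the choice of degrees as input are assumptions made for illustration.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Converts yaw and pitch (in degrees) into a unit direction vector.
Vec3 directionFromEuler(float yawDegrees, float pitchDegrees) {
    const float kDegToRad = 3.14159265358979f / 180.0f;
    const float yaw = yawDegrees * kDegToRad;
    const float pitch = pitchDegrees * kDegToRad;
    return {std::cos(yaw) * std::cos(pitch),
            std::sin(pitch),
            std::sin(yaw) * std::cos(pitch)};
}
```

With these conventions a yaw of -90 degrees and a pitch of 0 looks down the negative z axis.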

zoom

fov; field of view

Review (Copy)

OpenGL: a formal specification of a graphics API that defines the layout and output of each function.

GLAD: an extension loading library that loads and sets all OpenGL’s function pointers for us so we can use all (modern) OpenGL’s functions.

Viewport: the 2D window region where we render to.

Graphics Pipeline: the entire process vertices have to walk through before ending up as one or more pixels on the screen.

Shader: a small program that runs on the graphics card. Several stages of the graphics pipeline can use user-made shaders to replace existing functionality.

Vertex: a collection of data that represent a single point.

Normalized Device Coordinates: the coordinate system your vertices end up in after perspective division is performed on clip coordinates. All vertex positions in NDC between -1.0 and 1.0 will not be discarded or clipped and end up visible.

Vertex Buffer Object: a buffer object that allocates memory on the GPU and stores all the vertex data there for the graphics card to use.

Vertex Array Object: stores buffer and vertex attribute state information.

Element Buffer Object: a buffer object that stores indices on the GPU for indexed drawing.

Uniform: a special type of GLSL variable that is global (each shader in a shader program can access this uniform variable) and only has to be set once.

Texture: a special type of image used in shaders and usually wrapped around objects, giving the illusion an object is extremely detailed.

Texture Wrapping: defines the mode that specifies how OpenGL should sample textures when texture coordinates are outside the range: (0, 1).

Texture Filtering: defines the mode that specifies how OpenGL should sample the texture when there are several texels (texture pixels) to choose from. This usually occurs when a texture is magnified.

Mipmaps: stored smaller versions of a texture where the appropriate sized version is chosen based on the distance to the viewer.

stb_image: image loading library.

Texture Units: allows for multiple textures on a single shader program by binding multiple textures, each to a different texture unit.

Vector: a mathematical entity that defines directions and/or positions in any dimension.

Matrix: a rectangular array of mathematical expressions with useful transformation properties.

GLM: a mathematics library tailored for OpenGL.

Local Space: the space an object begins in. All coordinates relative to an object’s origin.

World Space: all coordinates relative to a global origin.

View Space: all coordinates as viewed from a camera’s perspective.

Clip Space: all coordinates as viewed from the camera’s perspective but with projection applied. This is the space the vertex coordinates should end up in, as output of the vertex shader. OpenGL does the rest (clipping/perspective division).

Screen Space: all coordinates as viewed from the screen. Coordinates range from 0 to screen width/height.

LookAt: a special type of view matrix that creates a coordinate system where all coordinates are rotated and translated in such a way that the user is looking at a given target from a given position.

Euler Angles: defined as yaw, pitch and roll that allow us to form any 3D direction vector from these 3 values.

Light

Colors

rgb

light

reflected from objects

PBR

Basic Lighting

Phong lighting model

ambient lighting

diffuse lighting

normal vector

translations should not have any effect on the normal vectors

normal matrix; removes the distortion that non-uniform scaling would otherwise cause on normals

Normal = mat3(transpose(inverse(model))) * aNormal

calculate the normal matrix on the CPU and send it to the shaders via a uniform before drawing (just like the model matrix).

specular lighting

Materials

struct Material {

vec3 ambient;

vec3 diffuse;

vec3 specular;

float shininess;

};

struct Light {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

Lighting maps

diffuse maps

specular maps

struct Material {

sampler2D diffuse;

sampler2D specular;

float shininess;

};

other map

normal/bump maps

reflection maps

Light Casters

directional light

direction

point light

attenuation: $$F_{att} = \frac{1.0}{K_c + K_l * d + K_q * d^2} $$

spot light

direction

cutoff angle

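smooth / soft edges $$I = \frac{\theta - \gamma}{\epsilon}$$

here θ is the cosine of the angle between the fragment direction and the spot direction, γ the cosine of the outer cutoff, and ε = φ - γ with φ the cosine of the inner cutoff; I is clamped to [0, 1]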

Multiple Lights

Review

Color vector: a vector portraying most of the real world colors via a combination of red, green and blue components (abbreviated to RGB). The color of an object is the reflected color components that an object did not absorb.

Phong lighting model: a model for approximating real-world lighting by computing an ambient, diffuse and specular component.

Ambient lighting: approximation of global illumination by giving each object a small brightness so that objects aren’t completely dark if not directly lit.

Diffuse shading: lighting that gets stronger the more a vertex/fragment is aligned to a light source. Makes use of normal vectors to calculate the angles.

Normal vector: a unit vector that is perpendicular to a surface.

Normal matrix: a 3x3 matrix that is the model (or model-view) matrix without translation. It is also modified in such a way (inverse-transpose) that it keeps normal vectors facing in the correct direction when applying non-uniform scaling. Otherwise normal vectors get distorted when using non-uniform scaling.

Specular lighting: sets a specular highlight the closer the viewer is looking at the reflection of a light source on a surface. Based on the viewer’s direction, the light’s direction and a shininess value that sets the amount of scattering of the highlight.

Phong shading: the Phong lighting model applied in the fragment shader.

Gouraud shading: the Phong lighting model applied in the vertex shader. Produces noticeable artifacts when using a small number of vertices. Gains efficiency for loss of visual quality.

GLSL struct: a C-like struct that acts as a container for shader variables. Mostly used for organizing input, output, and uniforms.

Material: the ambient, diffuse and specular color an object reflects. These set the colors an object has.

Light (properties): the ambient, diffuse and specular intensity of a light. These can take any color value and define at what color/intensity a light source shines for each specific Phong component.

Diffuse map: a texture image that sets the diffuse color per fragment.

Specular map: a texture map that sets the specular intensity/color per fragment. Allows for specular highlights only on certain areas of an object.

Directional light: a light source with only a direction. It is modeled to be at an infinite distance which has the effect that all its light rays seem parallel and its direction vector thus stays the same over the entire scene.

Point light: a light source with a location in a scene with light that fades out over distance.

Attenuation: the process of light reducing its intensity over distance, used in point lights and spotlights.

Spotlight: a light source that is defined by a cone in one specific direction.

Flashlight: a spotlight positioned from the viewer’s perspective.

GLSL uniform array: an array of uniform values. Work just like a C-array, except that they can’t be dynamically allocated.

Model Loading

Assimp(Open Asset Import Library)

3D modeling tools, uv-mapping

file formats

.obj, wavefront

Assimp

import different model files formats -> Assimp’s data structure

Mesh

a class

Model

Advanced OpenGL

Depth Testing

depth-buffer

stores information per fragment

same width and height as color buffer

16/24/32 bit floats

done in screen space after the fragment shader has run

early depth testing: before fragment shader run

glEnable(GL_DEPTH_TEST);

glDepthFunc

GL_ALWAYS

GL_NEVER

GL_LESS

…

depth value precision

linear depth buffer; almost never used in practice $$F_{depth} = \frac{z-near}{far-near}$$

non-linear $$F_{depth} = \frac{1/z-1/near}{1/far-1/near}$$

z-fighting

two planes or triangles are too closely aligned

not enough precision to figure out which one is in front

prevent z-fighting

never place objects too close to each other in a way that some of their triangles closely overlap.

set the near plane as far as possible

use a higher precision depth buffer; (cost of some performance)

Stencil Testing

discard fragment

stencil buffer

8 bits per stencil value

total of 256 different stencil values per pixel

use

Enable writing to the stencil buffer.

Render objects, updating the content of the stencil buffer.

Disable writing to the stencil buffer.

Render (other) objects, this time discarding certain fragments based on the content of the stencil buffer.

enable

glEnable(GL_STENCIL_TEST)

need to clear the stencil buffer each iteration

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT | GL_STENCIL_BUFFER_BIT)

glStencilMask(0xFF); // each bit is written to the stencil buffer as is

glStencilMask(0x00); // each bit ends up as 0 in the stencil buffer (disabling writes)

stencil functions;

when a stencil test should pass or fail

glStencilFunc

glStencilFunc(GL_EQUAL, 1, 0xFF); whenever the stencil value of a fragment is equal to 1, the fragment passes the test and is drawn, otherwise discarded

glStencilOp

default:

glStencilOp(GL_KEEP, GL_KEEP, GL_KEEP)

object outlining

Enable stencil writing.

Set the stencil func to GL_ALWAYS before drawing the (to be outlined) objects, updating the stencil buffer with 1s wherever the objects’ fragments are rendered.

Render the objects.

Disable stencil writing and depth testing.

Scale each of the objects by a small amount.

Use a different fragment shader that outputs a single (border) color.

Draw the objects again, but only if their fragments’ stencil values are not equal to 1.

Enable depth testing again and restore the stencil op to GL_KEEP.

Blending

the technique to implement transparency within objects

discarding fragments

only display some parts of texture and ignore others

discard

blending

glEnable(GL_BLEND)

equation $$\overline{C}_{result} = \overline{C}_{source} * F_{source} + \overline{C}_{destination} * F_{destination}$$

C: color vector

F: factor vector

source: output of the fragment shader; top one;

destination: currently stored in the color buffer; bottom one;

glBlendFunc(GLenum sfactor, GLenum dfactor)

common:

GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA

render semi-transparent textures

issue: transparent parts of front one are occluding the one in the background.

depth test discards them

don’t break the order

draw the most distant object first and the closest object last;

outline

draw all opaque objects first

sort all the transparent objects

draw all the transparent objects in sorted order
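The sorting step in the outline above can be sketched as a back-to-front sort by squared distance to the camera. Representing each transparent object by a single position is a simplifying assumption, and the names are illustrative.

```cpp
#include <algorithm>
#include <vector>

struct Vec3 { float x, y, z; };

float distanceSquared(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Returns the positions ordered from farthest to nearest relative to the camera,
// which is the order in which transparent objects should be drawn.
std::vector<Vec3> sortBackToFront(std::vector<Vec3> positions, const Vec3& camera) {
    std::sort(positions.begin(), positions.end(),
              [&camera](const Vec3& a, const Vec3& b) {
                  return distanceSquared(a, camera) > distanceSquared(b, camera);
              });
    return positions;
}
```

Squared distance is enough for ordering and avoids a square root per object. The sort has to be redone whenever the camera or the objects move.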

advanced techniques

order independent transparency

Face Culling

winding order

clockwise or counter-clockwise -> front-facing or back-facing

enable

glEnable(GL_CULL_FACE)

glCullFace(GL_FRONT)

GL_BACK: Culls only the back faces.

GL_FRONT: Culls only the front faces.

GL_FRONT_AND_BACK: Culls both the front and back faces.

Framebuffers

e.g.

color buffer

depth buffer

stencil buffer

the combination of these buffers is stored somewhere in GPU memory and is called a framebuffer

a default framebuffer is created and configured when the window is created

some use cases

scene mirror

post-processing effects

creating a framebuffer

create a framebuffer object;

glGenFramebuffers

bind it as the active framebuffer;

glBindFramebuffer

do some operations

GL_READ_FRAMEBUFFER

GL_DRAW_FRAMEBUFFER

glReadPixels

If we want all rendering operations to have a visual impact again on the main window, we need to make the default framebuffer active by binding to 0:

glBindFramebuffer(GL_FRAMEBUFFER, 0)

texture attachments

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, texture, 0)

attachment can be color, stencil, depth

unbind the framebuffer

render to a texture

glFramebufferRenderbuffer

to draw the scene to a single texture we’ll have to take the following steps:

Render the scene as usual with the new framebuffer bound as the active framebuffer.

Bind to the default framebuffer.

Draw a quad that spans the entire screen with the new framebuffer’s color buffer as its texture.

post-processing

Inversion

Grayscale

Kernel effects

kernel or convolution matrix

e.g. a sharpen kernel $$ \begin{bmatrix} 2&2&2\\ 2&-15&2\\ 2&2&2 \end{bmatrix} $$

Most kernels you’ll find over the internet all sum up to 1 if you add all the weights together. If they don’t add up to 1 it means that the resulting texture color ends up brighter or darker than the original texture value.

e.g. blur kernel; $$ \begin{bmatrix} 1&2&1\\ 2&4&2\\ 1&2&1 \end{bmatrix} / 16 $$

gives a drunk / not-wearing-glasses effect

e.g. edge detection kernel; $$ \begin{bmatrix} 1&1&1\\ 1&-8&1\\ 1&1&1 \end{bmatrix} $$

highlights all edges

darken the rest

Cubemaps

combine multiple textures

6 individual 2D textures

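glBindTexture(GL_TEXTURE_CUBE_MAP, textureID);

skybox

if the skybox is rendered first, its fragment shader runs for every pixel on screen, including fragments that early depth testing could have discarded because other objects end up covering them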

trick

render skybox last

trick depth buffer into believing that skybox has the max depth value of 1.0

so that it fails the depth test wherever there is a different object in front of it

void main() { TexCoords = aPos; vec4 pos = projection * view * vec4(aPos, 1.0); gl_Position = pos.xyww; }

change the depth function to GL_LEQUAL instead of the default GL_LESS

Environment mapping

reflection

calculate reflection vector

sample from cubemap

refraction

Snell’s law

Dynamic environment maps

a lot of tricks

…omit

Advanced Data

Batching vertex attributes

copying buffers

Advanced GLSL

GLSL’s built-in variables

gl_Position: The output position of the vertex shader, in clip space.

gl_FragCoord: The window-relative coordinate of the fragment being processed.

gl_FragColor: The output color of the fragment shader.

gl_PointCoord: The coordinate of the current point being processed when rendering points.

gl_ClipDistance: An array of distances to user-defined clip planes, used to clip primitives against those planes.

gl_FragDepth: The depth value of the fragment being processed, used to write to the depth buffer.

gl_PointSize: The size of the point being rendered.

gl_FrontFacing: A Boolean value indicating whether the primitive being processed is front-facing or back-facing.

gl_MaxDrawBuffers: The maximum number of draw buffers supported by the system.

gl_MaxPatchVertices: The maximum number of vertices per patch supported by the system.

gl_SampleID: The identifier of the current sample being processed when multisampling.

gl_SampleMask: A mask indicating which samples are written by the fragment shader.

gl_SampleMaskIn: A mask indicating which samples are covered by the primitive being processed.

gl_NumSamples: The number of samples per pixel in the current context.

Interface blocks

Uniform buffer objects

|Type|Layout rule|
|--|--|
|Scalar (e.g. int or bool)|Each scalar has a base alignment of N.|
|Vector|Either 2N or 4N. This means that a vec3 has a base alignment of 4N.|
|Array of scalars or vectors|Each element has a base alignment equal to that of a vec4.|
|Matrices|Stored as a large array of column vectors, where each of those vectors has a base alignment of vec4.|
|Struct|Equal to the computed size of its elements according to the previous rules, but padded to a multiple of the size of a vec4.|

Geometry Shader

operate on individual primitives(points,lines,triangles)

executed after the vertex shader stage and before the rasterization stage

optional stage; not supported on all graphics hardware

e.g.

visualizing normal vectors

exploding objects

Instancing

render multiple instances of a single object

use VBOs to store the attributes of the instances

draw instances

glDrawArraysInstanced

primitives defined by arrays of vertices

glDrawElementsInstanced

primitives defined by indices into arrays of vertices

instance ID

Anti Aliasing

jagged edges

SSAA, supersample anti-aliasing

MSAA, multisample anti-aliasing

Advanced Lighting

Advanced Lighting

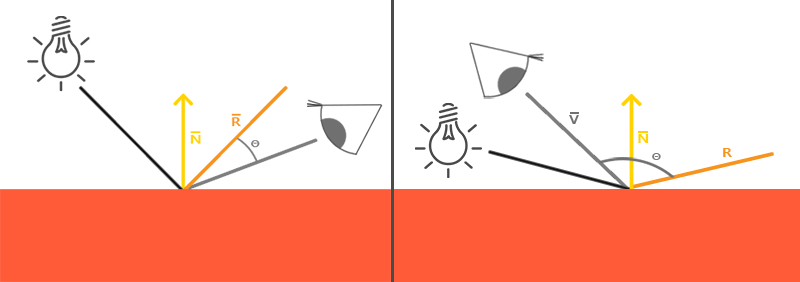

Blinn-Phong

$$

\overline{H} = \frac{\overline{L} + \overline{V}}{||\overline{L} + \overline{V}||}

$$
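The halfway vector and the resulting specular term can be sketched as follows. L and V are assumed to be unit vectors pointing from the surface toward the light and the viewer; the `Vec3` helpers and function names are illustrative.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// H = (L + V) / ||L + V||
Vec3 halfwayVector(Vec3 lightDir, Vec3 viewDir) {
    return normalize({lightDir.x + viewDir.x, lightDir.y + viewDir.y, lightDir.z + viewDir.z});
}

// Blinn-Phong specular term: max(dot(N, H), 0) raised to the shininess.
float blinnPhongSpecular(Vec3 normal, Vec3 lightDir, Vec3 viewDir, float shininess) {
    const Vec3 h = halfwayVector(lightDir, viewDir);
    return std::pow(std::max(dot(normal, h), 0.0f), shininess);
}
```

Unlike the Phong reflection vector, the angle between N and H never exceeds 90 degrees while both L and V are above the surface, which avoids the hard specular cutoff at low shininess values.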

Gamma Correction

all the color and brightness options we configure in our applications are based on what we perceive from the monitor and thus all the options are actually non-linear brightness/color options.

Shadows

Shadow Mapping

outline

Set up a light source: Choose a light source in the scene and set its position and direction.

Render the scene from the light’s point of view: Render the scene from the light’s point of view and store the depth information of each pixel in a texture known as a shadow map. This is typically done using a specialized pass of the rendering pipeline.

Compare the depth information of each pixel: During the main rendering pass, compare the depth of each pixel with the depth information stored in the shadow map. If the depth of a pixel is greater than the depth stored in the shadow map, then that pixel is in shadow.

Apply shadows to objects in the scene: Based on the comparison between the depth information of each pixel and the shadow map, shadows are applied to the objects in the scene by adjusting the color or brightness of each pixel.

Repeat for multiple lights: If there are multiple light sources in the scene, the process can be repeated for each light to generate multiple shadow maps, which can be combined to produce a more accurate representation of shadows in the scene.

Point Shadows

Omnidirectional shadow maps

PCF, Percentage-closer filtering

Normal Mapping

tangent space

local to the surface of a triangle

Tangent

right

Normal

up, normal vector

Bitangent

forward

Parallax Mapping

parallax

displacement mapping like

outline

a height map; depth of each point of a surface

a normal map; from height map

apply offset; view angle + height

only used for 2D and static env

for dynamic env

height map with dynamic tessellation

displacement mapping

normal mapping with dynamic lighting

volumetric fog and shadows

HDR

high dynamic range

tone mapping

with gamma correction
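Tone mapping followed by gamma correction can be sketched per color channel. The notes do not name a tone mapping operator, so this example assumes Reinhard tone mapping as one simple choice and a gamma of 2.2; the function names are illustrative.

```cpp
#include <cmath>

// Reinhard operator (an assumed choice): maps [0, infinity) into [0, 1).
float reinhardToneMap(float hdr) {
    return hdr / (hdr + 1.0f);
}

// Gamma-encodes a linear value so it displays correctly on a typical monitor.
float gammaEncode(float linear, float gamma) {
    return std::pow(linear, 1.0f / gamma);
}

// Tone map first (in linear space), then apply gamma correction.
float toDisplay(float hdr, float gamma = 2.2f) {
    return gammaEncode(reinhardToneMap(hdr), gamma);
}
```

The order matters: tone mapping operates on linear HDR values, and gamma correction is the final step before the value is written out.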

Bloom

all brightly lit region -> glow-like effect (halo)

often with HDR

//TODO

Deferred Shading

vs. forward rendering/shading

//TODO

SSAO

AO: ambient occlusion

SS: screen-space

a great sense of depth

first used in Crysis (2007, Crytek)

The technique uses a scene’s depth buffer in screen-space to determine the amount of occlusion instead of real geometrical data.

occlusion factor

each fragment on a screen-filled quad

used to reduce or nullify the fragment’s ambient lighting component

obtained by taking multiple depth samples in a sphere sample kernel surrounding the fragment position

compare each of the samples with the current fragment’s depth value

sample count

too low -> banding

too high -> lose performance

randomly rotating the sample kernel

noise pattern

blurring

sample kernel used was a sphere -> flat walls look gray -> Crysis visual style

a hemisphere sample kernel oriented along a surface’s normal vector

an accelerating interpolation function -> most samples closer to its origin

Random kernel rotations

SSAO shader input:

G-buffer textures

position

normal

albedo color

noise texture

normal-oriented hemisphere kernel samples

range check for edge of a surface

ambient occlusion blur, to smooth noise pattern

apply ambient occlusion

multiply the per-fragment ambient occlusion factor to the lighting’s ambient component

PBR

Theory

physically based rendering

an approximation of reality, physically based shading, not physical shading

conditions to satisfy

be based on the microfacet surface model

be energy conserving

the amount of light that a material reflects should not exceed the amount of light that it receives

use a physically based BRDF (bidirectional reflectance distribution function)

in:

light direction

view direction

surface normal

microsurface roughness

out:

a scale or weight: how much each incoming light ray contributes to the reflected light

Cook-Torrance BRDF

DistributionGGX

GeometrySmith

fresnelSchlick function

originally explored by Disney and adopted by Epic Games

Lighting

reflectance equation $$L_o(p,\omega_o) = \int\limits_{\Omega} (k_d\frac{c}{\pi} + \frac{DFG}{4(\omega_o \cdot n)(\omega_i \cdot n)}) L_i(p,\omega_i) n \cdot \omega_i d\omega_i$$
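The D, F and G terms of the equation can be sketched as scalar functions. This assumes the commonly used Trowbridge-Reitz GGX distribution, the Schlick-GGX geometry term with the direct-lighting remapping k = (roughness + 1)^2 / 8, and the Fresnel-Schlick approximation. A real shader would use a vec3 for F0; a single float is used here to keep the sketch short.

```cpp
#include <cmath>

const float kPi = 3.14159265358979f;

// D: normal distribution function (Trowbridge-Reitz GGX).
float distributionGGX(float nDotH, float roughness) {
    const float a = roughness * roughness;
    const float a2 = a * a;
    const float denom = nDotH * nDotH * (a2 - 1.0f) + 1.0f;
    return a2 / (kPi * denom * denom);
}

// One direction of the geometry term (Schlick-GGX, direct-lighting k).
float geometrySchlickGGX(float nDotX, float roughness) {
    const float r = roughness + 1.0f;
    const float k = (r * r) / 8.0f;
    return nDotX / (nDotX * (1.0f - k) + k);
}

// G: Smith's method combines the view and light directions.
float geometrySmith(float nDotV, float nDotL, float roughness) {
    return geometrySchlickGGX(nDotV, roughness) * geometrySchlickGGX(nDotL, roughness);
}

// F: Fresnel-Schlick approximation with base reflectivity f0.
float fresnelSchlick(float cosTheta, float f0) {
    return f0 + (1.0f - f0) * std::pow(1.0f - cosTheta, 5.0f);
}
```

Looking straight at a surface (cosTheta = 1) returns the base reflectivity f0, while grazing angles (cosTheta near 0) approach full reflection.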

Textured PBR

IBL (Image based lighting)

diffuse irradiance

PBR and HDR

The radiance HDR file format

equirectangular map

sIBL archive

specular IBL

split sum approximation

pre-filtered environment map //TODO